Second perhaps only to the EAU’s dedicated Uro-Technology section ESUT, ERUS prides itself on bringing together the greatest innovators in urological robotic surgery. The Technology Forum was added to the Annual Meeting’s scientific programme in recent years, allowing manufacturers, engineers and surgeons to present new developments and exchange views on where the field needs to go in the coming years.

At ERUS19 in Lisbon, the symposium fell on the first day of the meeting, before the main scientific programme started. The auditorium was nevertheless completely packed when the Technology Forum took place.

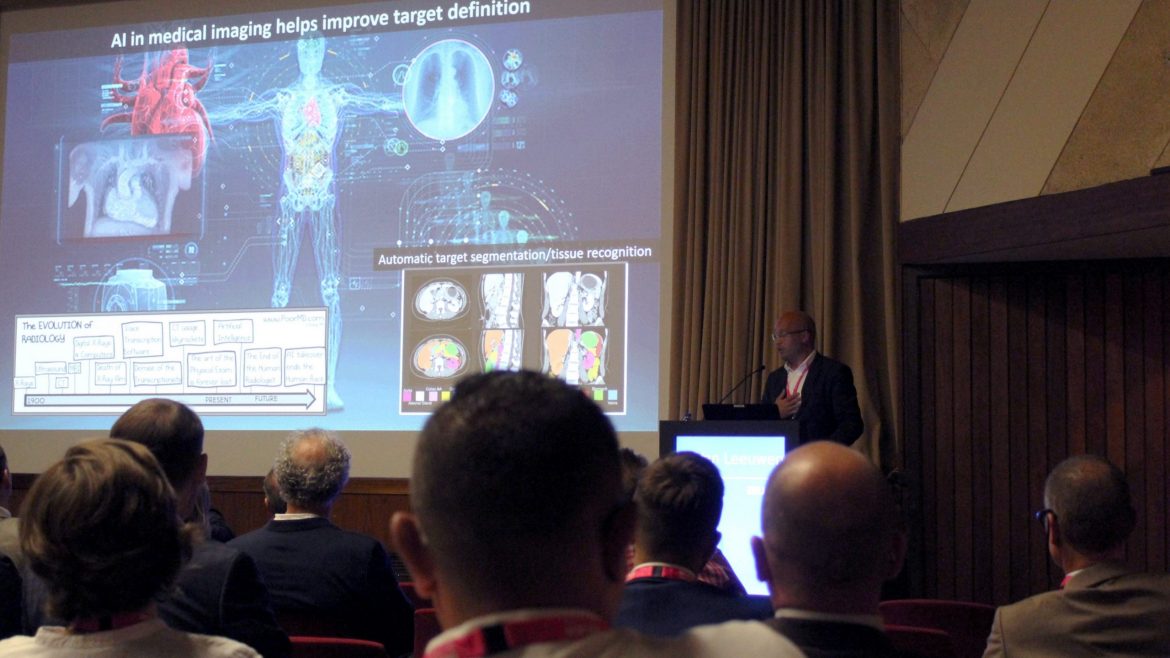

Dr. Fijs Van Leeuwen of Leiden University Medical Centre and ORSI gave an engineer’s view on the potential for AI in assisting or even conducting surgery in the (near) future. In a centuries-long attempt to “move surgery beyond butchering”, the so-called fourth industrial revolution is now beginning to transform surgery and medical care in general. The first steps are being made with machine learning through pattern recognition and deep learning through neural networks.

Training the robot

“We’ve not quite made the leap to deep learning with robotic surgery yet,” said Dr. Van Leeuwen. “We are still determining how to train the robot and programmes in the correct manner. We would like to see AI acting as a servant to the surgeon, assisting in tumour identification and in matching pre-operative images to the patient.”

“Radiologists are already starting to fear for their jobs due to AI. As there is no movement or displacement of the images during their analysis, they are relatively easier to replace than surgeons.” Dr. Van Leeuwen showed a demonstration of the skeleton and lymph nodes dynamically projected onto a patient, corrected for movement.

“We can teach the computer. As engineers we need to combine all available information and present it in a useful, non-intrusive way, closely integrated into robotic systems. But will the public accept autonomous surgical robots when self-driving cars still crash?”

Prof. Prokar Dasgupta offered a surgeon’s view, pointing out how even people’s smartphones are already unobtrusively scanning and identifying faces and objects. “Basically, the introduction of AI and machine learning into surgery is using data to answer questions.” Prof. Dasgupta also briefly explained the concept of backpropagation, the method that is used to train neural networks.

Some results show that machines can measure parameters beyond conventional human perception, for example by ranking surgeons by ability rather than by experience or seniority alone. He cited a study by Dr. Andrew Hung, which used machine learning to identify objective performance metrics for robot-assisted radical prostatectomy. The machine was able to spot natural aptitude even in surgeons with low case volumes. Other algorithms are being trained on the work of radiologist and pathologist pairings, with promising preliminary results.

Some discussion followed the two complementary talks, mainly about the timeframe in which robots could become active participants in surgery. The speakers considered navigating tissue layers to be the largest hurdle. Tissue deformation is the underlying difficulty: different tissues stretch to different degrees, and this also varies from patient to patient, making it difficult to teach a machine the subtleties of surgery.

Dr. Van Leeuwen admitted that he was fascinated but also scared by the potential of autonomous surgical robots. “All robotic surgery-related companies are just starting out in this field. They are at the moment still defining training sets. I personally think ERUS should be defining these,” said Van Leeuwen.

Prof. Mahendra Bhandari added to the discussion with some observations: “Surgeons are the gatekeepers of the necessary data. By combining the available data of five million robotic surgeons [similar to how Tesla uses its cars’ data], we can build a model. This requires change of mindset and of culture. Data capture is better than data collection as it can feed dynamic models that constantly adjust and feed back.”

New technologies

Further talks came from Dr. Jonathan Sorger, Vice President of Research at Intuitive, and from Prof. Dasgupta in a second appearance, followed by a report by Prof. Alan McNeill on his first experiences of using the CMR Versius system on a cadaver.

Dr. Sorger gave an overview of how Intuitive’s systems were developed and have evolved over the past twenty years, hinting at roads not taken and explaining certain design decisions. Responding to an audience question by Dr. Henk Van der Poel, Sorger suggested that companies are not yet standardizing their surgical robots’ interfaces because there is still a lot of commercial competition in the field and the market has not yet matured to a “commodity” phase.

Prof. Dasgupta presented the PROCEPT Biorobotics automated Aquablation system, tipping his hat to the pioneering work by John Wickham 30 years earlier. Prof. McNeill closed the session with a report on his recent first experiences with the Versius system during a visit to Cambridge. The videos of his team’s work show his very first hands-on experience with the system, after a short simulation training. McNeill commented on the differences between this upcoming system and the more established systems, pointing out some similarities to laparoscopic procedures, a different control interface and the use of glasses instead of a console.